Ongoing research

The Social Robots Team proudly presents the exciting research taking place in the lab! Below you can find a short description of each ongoing project and its current stage. If you want to know more, please contact one of the researchers involved. Please check back for future updates.

From automata to animate beings

Why do we sometimes perceive a robot as merely an automaton, and at other times as an engaging social being? Can we understand the anger of a robot? In these theoretical projects, we outline how human social cognition shapes and constrains our interaction with robots.

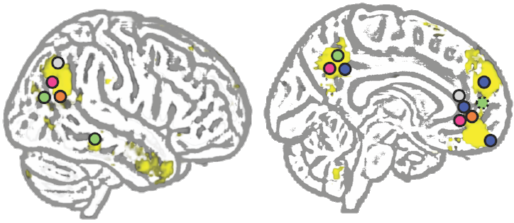

The social neuroscience of human-robot interactions

To what extent do brain regions mediating social interaction with humans also support interactions with our mechanical friends? In this line of research, the team uses neuroimaging to probe the neurocognitive mechanisms of human-robot interaction.

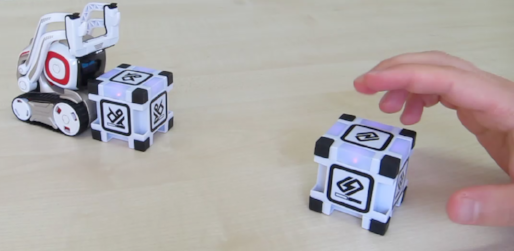

Companion and assistive social robots

To support independent living and reduce the workload of healthcare professionals, robotic agents are being developed and implemented. Their embodied nature allows them to provide physical assistance (e.g. carrying trays of food), and their technical capabilities (cameras, recorders, sensors, etc.) make them suitable for environment and health monitoring. Robots with social abilities are also being developed, and it has been suggested that such robots could provide entertainment, emotional support, and companionship. In the lab, Katie and Guy are investigating the role that such ‘social robots’ may play in the lives of older adults and informal caregivers, respectively.

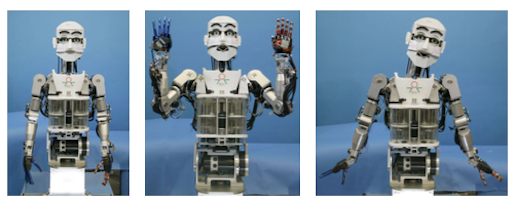

Social cognition during human-robot interaction

What factors influence our thoughts, feelings or actions while interacting with artificial agents, such as robots? In this section, we introduce some of our recent and ongoing studies that examine the role of robotic factors, such as emotional expressions, and human factors, such as our cultural background or previous experience with robots. These studies use a range of task setups, from economic games to other forms of social interaction.

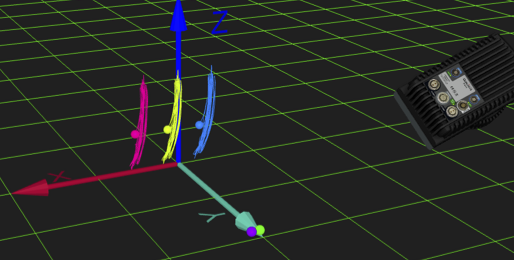

The role of movement in social interactions

Much of the way humans socially interact with one another is through movement. Using everything from hand gestures to dances, we are capable of expressing a huge range of intentions and emotions through movement. To study this in more detail, it is necessary to capture human movements and measure them with a high level of precision. To achieve this, our lab uses sophisticated motion-capture technology. Much like the systems used to capture computer-generated performances for movies and video games, our two motion-capture labs use infrared cameras and lightweight reflective markers to track the positions of participants during our experiments. These cameras can take position measurements up to 300 times a second, making them an invaluable tool for studying the way humans (and robots) move during social interactions.
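As a rough illustration of what such position measurements make possible, the sketch below estimates the speed of a single marker from consecutive samples. It assumes positions arrive as an array of 3D coordinates in metres recorded at the 300 Hz upper rate mentioned above; the function name `marker_speed` and the example trajectory are hypothetical and are not taken from the lab's actual pipeline.

```python
import numpy as np

SAMPLE_RATE_HZ = 300.0  # upper sampling rate quoted in the text


def marker_speed(positions, sample_rate_hz=SAMPLE_RATE_HZ):
    """Estimate marker speed (m/s) between consecutive samples.

    positions: array of shape (n_samples, 3), marker coordinates in metres.
    Returns an array of shape (n_samples - 1,).
    """
    positions = np.asarray(positions, dtype=float)
    # Displacement vector between each pair of consecutive frames.
    displacements = np.diff(positions, axis=0)
    # Distance per frame multiplied by frames per second gives metres per second.
    return np.linalg.norm(displacements, axis=1) * sample_rate_hz


# Hypothetical marker moving 1 mm per frame along the x axis.
trajectory = np.column_stack([np.arange(5) * 0.001, np.zeros(5), np.zeros(5)])
speeds = marker_speed(trajectory)
print(speeds)
```

Real recordings usually contain noise and occasional missing markers, so a practical analysis would typically filter the trajectories before differentiating them.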

fNIRS & human-robot interaction

One of the aims of the Social Robots project is to investigate the brain activity underlying human-robot interaction in real time. We can investigate this question with functional near-infrared spectroscopy (fNIRS). This brain imaging technique takes advantage of two facts: some biological tissue (such as bone) is relatively transparent to near-infrared light, and oxygenated and deoxygenated blood differ in their absorption spectra. Because we can predict the blood-oxygen-level-dependent (BOLD) response of the brain in its active state, as well as the path of light through the biological tissue, we can measure activity in the cortex (up to 3 cm deep at the moment). fNIRS is a promising brain imaging modality for human-robot interaction (HRI), as it allows mobile and relatively low-cost recording of the brain signal.
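One common way to exploit these differing absorption spectra is the modified Beer-Lambert law, which relates the change in optical density at each wavelength to changes in oxygenated and deoxygenated haemoglobin concentration. The text does not say which method the lab uses, so the sketch below is only an illustration under stated assumptions: the function name `mbll`, the extinction coefficients, the source-detector distance, and the differential pathlength factors are all placeholder values, not real tissue constants or lab settings.

```python
import numpy as np


def mbll(delta_od, extinction, distance_cm, dpf):
    """Solve the modified Beer-Lambert law for two wavelengths.

    delta_od:    (2,) change in optical density at each wavelength.
    extinction:  (2, 2) extinction coefficients; rows are wavelengths,
                 columns are [oxygenated, deoxygenated] haemoglobin.
    distance_cm: source-detector separation.
    dpf:         (2,) differential pathlength factor per wavelength.
    Returns (2,) concentration changes [oxygenated, deoxygenated].
    """
    delta_od = np.asarray(delta_od, dtype=float)
    extinction = np.asarray(extinction, dtype=float)
    # Effective optical path length for each wavelength.
    path = distance_cm * np.asarray(dpf, dtype=float)
    # delta_od[i] = path[i] * sum_j extinction[i, j] * delta_conc[j]
    return np.linalg.solve(extinction * path[:, None], delta_od)


# Placeholder numbers for illustration only (NOT real coefficients).
E = np.array([[1.0, 3.0],   # shorter wavelength: deoxygenated absorbs more
              [2.5, 1.5]])  # longer wavelength: oxygenated absorbs more
dpf = np.array([6.0, 6.0])
distance = 3.0

# Simulate a measurement from a known concentration change, then recover it.
true_change = np.array([0.002, -0.001])
simulated_od = (E * (distance * dpf)[:, None]) @ true_change
recovered = mbll(simulated_od, E, distance, dpf)
print(recovered)
```

Using two wavelengths gives two equations for the two unknown concentration changes, which is why the system can be solved directly.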